Science and Technology

Context:

- In William Golding’s famous novel, Lord of the Flies, Jack emphasises the importance of following rules and establishing a system of governance among the boys.

- Self-regulation or no regulation can be disastrous at times.

- This lesson is particularly relevant in the context of Artificial Intelligence (AI) regulations and deepfakes in India.

Deep synthesis:

- It is defined as the use of technologies, including deep learning and augmented reality, to generate text, images, audio and video to create virtual scenes.

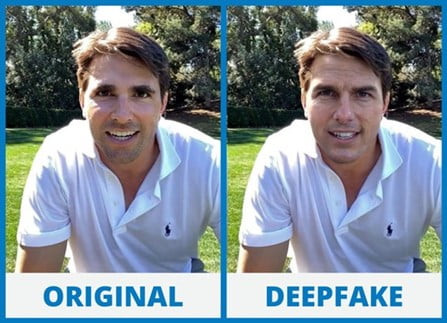

- One of the most notorious applications of the technology is deepfakes, where synthetic media is used to swap the face or voice of one person for another.

Deepfakes

- Deepfakes leverage powerful techniques from machine learning (ML) and artificial intelligence (AI) to manipulate or generate visual and audio content with a high potential to deceive.

Methodology:

- A neural network needs to be trained with lots of video footage of the person – including a wide range of facial expressions, under all kinds of different lighting, and from every conceivable angle – so that the artificial intelligence gets an in-depth ‘understanding’ of the essence of the person in question.

- The trained network will then be combined with techniques like advanced computer graphics in order to superimpose a fabricated version of this person onto the one in the video.

- The latest technology, however, such as the system developed at Samsung’s AI lab in Russia, makes it possible to create deepfake videos using only a handful of images, or even just one.
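
The superimposition step described above can be illustrated with a deliberately simplified sketch. It assumes the trained network has already produced a synthetic face patch and a soft mask. The function name `blend_patch`, the tiny grayscale pixel grids, and the choice of plain alpha blending are all assumptions made for illustration, not the method of any specific deepfake tool.

```python
from typing import List

Image = List[List[float]]  # grayscale pixels in [0.0, 1.0], row-major


def blend_patch(frame: Image, patch: Image, mask: Image, top: int, left: int) -> Image:
    """Alpha-blend `patch` into a copy of `frame` at position (top, left).

    mask[r][c] == 1.0 means "use the synthetic pixel", 0.0 means "keep the
    original pixel"; values in between give a soft edge.
    """
    out = [row[:] for row in frame]  # copy so the input frame is left untouched
    for r, patch_row in enumerate(patch):
        for c, synthetic in enumerate(patch_row):
            alpha = mask[r][c]
            original = frame[top + r][left + c]
            out[top + r][left + c] = alpha * synthetic + (1.0 - alpha) * original
    return out


# Hypothetical 3x3 video frame (all black) and 2x2 generated patch (all white).
frame = [[0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0]]
patch = [[1.0, 1.0],
         [1.0, 1.0]]
mask = [[1.0, 0.5],
        [0.5, 0.0]]

blended = blend_patch(frame, patch, mask, top=1, left=1)
```

Real systems work on colour frames and estimate the mask from facial landmarks, but the principle is the same: the closer the mask value is to 1, the more of the fabricated pixel replaces the original.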

Application examples:

- Deepfake technology will enable us to experience things that have never existed, or to envision a myriad of future possibilities.

- Researchers at Samsung’s AI lab in Moscow, for instance, recently managed to transform Da Vinci’s Mona Lisa into video.

- Editing video footage without the need for reshoots, or recreating artists who are no longer with us so that they can perform live.

- Produce speech from text and edit it just like you would images in Photoshop

- For example, Adobe’s VoCo software

- Deep generative models offer new possibilities in healthcare

- help researchers develop new ways of treating diseases without being dependent on actual patient data

Issues:

- Lack of proper regulations creates avenues for individuals, firms and even non-state actors to misuse AI.

- Legal ambiguity, coupled with a lack of accountability and oversight, is a potent mix for a disaster.

- Policy vacuums on deepfakes are a perfect archetype of this situation.

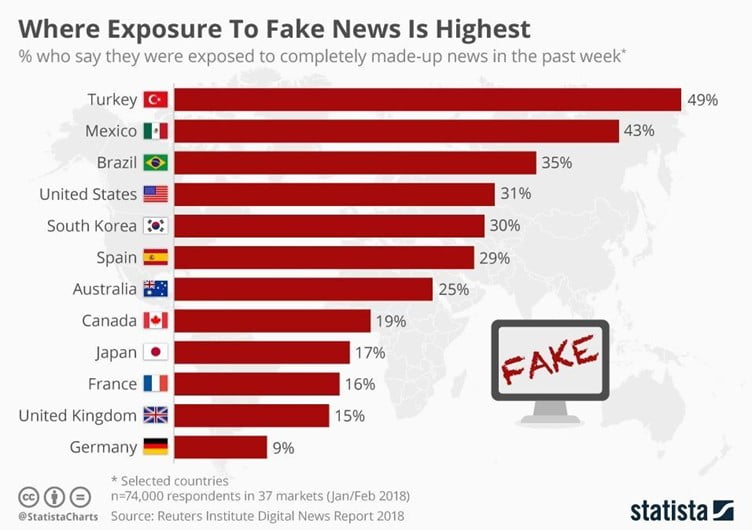

- Since they are compelling, deepfake videos can be used to spread misinformation and propaganda.

- They seriously compromise the public’s ability to distinguish between fact and fiction.

- There has been a history of using deepfakes to depict someone in a compromising and embarrassing situation. For instance, there is no dearth of deepfake pornographic material of celebrities.

- Such photos and videos amount not only to an invasion of privacy of the people purportedly shown in them, but also to harassment.

- Deepfakes have been used for financial fraud.

- Recently, scammers used AI-powered software to trick the CEO of a U.K. energy company over the phone into believing he was speaking with the head of the German parent company. As a result, the CEO transferred a large sum of money (€220,000) to what he thought was a supplier.

- The audio of the deepfake effectively mimicked the voice of the CEO’s boss, including his German accent.

Creating tensions in the neighbourhood:

- There are three areas where deepfakes can become a lethal tool in the hands of India’s unfriendly neighbours and non-state actors seeking to create tensions in the country.

- Influence elections

- Recently, Taiwan’s cabinet approved amendments to election laws to punish the sharing of deepfake videos or images, amid growing concern that China is spreading false information to influence public opinion and manipulate election outcomes.

- Espionage activities

- Doctored videos can be used to blackmail government and defence officials into divulging state secrets.

- In 2019, the Associated Press identified a LinkedIn profile under the name Katie Jones as a likely front for AI-enabled espionage.

- In March 2022, Ukrainian President Volodymyr Zelensky revealed that a video posted on social media in which he appeared to be instructing Ukrainian soldiers to surrender to Russian forces was actually a deepfake.

- Deepfakes could also be used to radicalise populations, recruit terrorists, or incite violence.

- Individuals can also deny the authenticity of genuine content, particularly if it shows them engaging in inappropriate or criminal behaviour, by claiming that it is a deepfake.

- This is known as the ‘Liar’s Dividend’: the idea that individuals can exploit the increasing awareness and prevalence of deepfake technology to their advantage by denying the authenticity of certain content.

Legislation and challenges:

- Currently, very few provisions under the Indian Penal Code (IPC) and the Information Technology Act, 2000 can be potentially invoked to deal with the malicious use of deepfakes.

- Section 500 of the IPC provides punishment for defamation.

- Sections 67 and 67A of the Information Technology Act punish the publication or transmission of obscene and sexually explicit material in electronic form.

- The Representation of the People Act, 1951, includes provisions prohibiting the creation or distribution of false or misleading information about candidates or political parties during an election period.

- The Election Commission of India has set rules that require registered political parties and candidates to get pre-approval for all political advertisements on electronic media, including TV and social media sites, to help ensure their accuracy and fairness.

- However, these rules do not address the potential dangers posed by deepfake content.

- There is often a lag between new technologies and the enactment of laws to address the issues and challenges they create.

- In India, the legal framework related to AI is insufficient to adequately address the various issues that have arisen due to AI algorithms.

Suggestions:

- The Union government should introduce separate legislation regulating the nefarious use of deepfakes and the broader subject of AI.

- Legislation should not hamper innovation in AI, but it should recognise that deepfake technology may be used in the commission of criminal acts and should provide provisions to address the use of deepfakes in these cases.

- Antivirus for deepfakes – Sensity, an Amsterdam-based company that develops deep learning technologies for monitoring and detecting deepfakes, has developed a visual threat intelligence platform that uses the same deep learning processes used to create deepfakes, combining deepfake detection with advanced video forensics and monitoring capabilities.

- Social media deepfake policies – For instance, Instagram’s and Facebook’s policy is to remove ‘manipulated media’ – with the exception of parodies.

Best practices from the world:

- The European Union’s Code of Practice 2018

- For the first time worldwide, it brought together industry players to commit to countering disinformation.

- Signed by online platforms Facebook, Google, Twitter and Mozilla, as well as by advertisers and other players in the advertising industry including Microsoft and TikTok.

- If found non-compliant, these companies can face fines of up to 6% of their annual global turnover.

- The U.S.’s bipartisan Deepfake Task Force Act 2021

- It aims to assist the Department of Homeland Security (DHS) in countering deepfake technology.

- The measure directs the DHS to conduct an annual study of deepfakes: assess the technology used, track its uses by foreign and domestic entities, and identify available countermeasures.

- China’s new policy to curb deepfakes

- The policy requires deep synthesis service providers and users to ensure that any doctored content using the technology is explicitly labelled and can be traced back to its source.

- Further, it requires that the consent of the person in question be obtained.
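
The labelling and traceability requirements above could be met in many ways. The following is a minimal sketch of one possible approach, not the mechanism prescribed by the policy: it attaches an explicit "synthetic" label to a piece of media and records a SHA-256 fingerprint so the content can later be matched to its provider. The function names, the record fields, and the `provider-001` identifier are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_provenance_record(media_bytes: bytes, provider_id: str, consent_obtained: bool) -> dict:
    """Build a record that labels content as synthetic and ties it to its source."""
    return {
        "label": "synthetic-media",            # explicit disclosure label
        "provider_id": provider_id,            # who generated the content (traceability)
        "consent_obtained": consent_obtained,  # consent of the person depicted
        "sha256": hashlib.sha256(media_bytes).hexdigest(),  # fingerprint of the exact bytes
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def matches_record(media_bytes: bytes, record: dict) -> bool:
    """Check that these media bytes are the ones the record was issued for."""
    return hashlib.sha256(media_bytes).hexdigest() == record["sha256"]


# Hypothetical usage with placeholder bytes standing in for a video file.
record = make_provenance_record(b"fake-video-bytes", "provider-001", consent_obtained=True)
print(json.dumps(record, indent=2))
```

A plain hash only matches byte-identical copies, so re-encoding or cropping a video would break the link. Practical schemes would therefore need more robust techniques such as watermarking or signed metadata.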

Way forward:

- We cannot always rely on self-regulation; hence, regulations such as the proposed Digital India Bill are much needed in the field of AI.

- In order to minimise deception and curb the undermining of trust, technical experts, journalists, and policymakers will play a critical role in speaking out and educating the public about the capabilities and dangers of synthetic media.

- With increased public awareness, we could learn to limit the negative impact of deepfakes, find ways to co-exist with them, and even benefit from them in the future.

Source: The Hindu